The primary goal of this lab is to become more familiar with photogrammetric tasks performed on aerial photographs and satellite images. These tasks include calculating photographic scale, measuring the areas and perimeters of features, and calculating relief displacement. The lab also introduces stereoscopy and the orthorectification of satellite images. After completing it, I will have a working understanding of the photogrammetric methods applied to aerial photographs and satellite images.

Methods

The first task is to calculate the scale of the nearly vertical aerial image shown in Figure 1. The scale is the ratio of a distance measured on the photograph to the same distance on the ground: Scale = Photo distance / Ground distance. In Figure 1, I used the distance between points A and B, which is 8822.47 ft on the ground and 3 in on the photograph. Both distances must be in the same units before dividing, so the ground distance is first converted to inches. The calculations listed below give a scale of approximately 1:35,000 for the nearly vertical aerial image.

a. S = Photo distance / Ground distance, where Pd = 3 in and Gd = 8822.47 ft = 105869.64 in

b. S = 3 in / 105869.64 in

c. S = (3/3) / (105869.64/3)

d. S = 1 / 35289.88

S ≈ 1:35,000
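The arithmetic above can be checked with a short script. This is only a sketch: the function name `photo_scale_denominator` is made up for illustration, and the inputs are the point A to point B measurements quoted in the text.

```python
INCHES_PER_FOOT = 12


def photo_scale_denominator(photo_distance_in, ground_distance_ft):
    """Return x in the representative fraction 1:x.

    Both distances are put into inches before dividing, so the
    units cancel and the scale is a pure ratio.
    """
    ground_distance_in = ground_distance_ft * INCHES_PER_FOOT
    return ground_distance_in / photo_distance_in


# Values from Figure 1: 3 in on the photo, 8822.47 ft on the ground.
denominator = photo_scale_denominator(3.0, 8822.47)
print(f"Scale = 1:{denominator:,.2f}")
print(f"Rounded to the nearest thousand = 1:{round(denominator, -3):,.0f}")
```

Rounding to the nearest thousand is a reporting choice; the unrounded denominator is the more precise value.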

The second task also involved finding the scale of an aerial photograph, this time the one shown in Figure 2. Here I had to use the equation Scale = Focal length / (Height of aircraft - Terrain height). I was given the altitude of the aircraft, the focal length of the camera lens, and the elevation of the AOI. The calculation listed below gives a scale of approximately 1:39,000.

a. S = Focal length / (Height of aircraft - Terrain height)

b. Height of aircraft = 20,000 ft, focal length = 152 mm, terrain height = 796 ft

c. 152 mm = 0.498688 ft

d. S = 0.498688 / (20,000 - 796)

e. S = 0.498688 / 19204

f. S = (0.498688/0.498688) / (19204/0.498688)

g. S = 1 / 38509.05

h. S ≈ 1:39,000
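The same calculation can be sketched in code. The function name `scale_denominator_from_altitude` is a hypothetical helper, and the conversion uses the exact value 304.8 mm per foot, so the unrounded result differs slightly in the second decimal place from a hand calculation that rounds the focal length to 0.498688 ft.

```python
MM_PER_FOOT = 304.8


def scale_denominator_from_altitude(focal_length_mm, flying_height_ft,
                                    terrain_elevation_ft):
    """Return x in the scale 1:x, where S = f / (H - h).

    The focal length is converted to feet so that it shares units
    with the height of the aircraft above the terrain.
    """
    focal_length_ft = focal_length_mm / MM_PER_FOOT
    height_above_terrain_ft = flying_height_ft - terrain_elevation_ft
    return height_above_terrain_ft / focal_length_ft


# Values given for Figure 2.
denominator = scale_denominator_from_altitude(152.0, 20000.0, 796.0)
print(f"Scale = 1:{denominator:,.2f}")
print(f"Rounded to the nearest thousand = 1:{round(denominator, -3):,.0f}")
```

Note that the terrain height is subtracted from the aircraft altitude before dividing; leaving it out would give the scale at the datum rather than at the AOI.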

The third task was to measure the area and perimeter of features visible in an aerial photograph. The procedure is the same for any image, so I will only go over the steps for using the tool. After opening the image in Erdas Imagine, click the "Measure Perimeters and Areas" tool. With that tool, digitize the outline of the feature whose perimeter and area you want to know, and double-click to complete the tracing. Once the tracing is complete, the tool displays the area and perimeter of the digitized zone.
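To illustrate what a measurement like this involves, the sketch below computes the area and perimeter of a digitized polygon from its vertex coordinates using the shoelace formula. It is an assumption-laden illustration, not a description of how Erdas Imagine implements its tool; the function name and the sample rectangle are hypothetical, and the vertices are assumed to be in planar map units such as meters.

```python
import math


def polygon_area_and_perimeter(vertices):
    """Return (area, perimeter) of a simple polygon.

    vertices is a list of (x, y) tuples in planar units, listed in
    order around the outline. The polygon is closed automatically.
    """
    n = len(vertices)
    twice_area = 0.0
    perimeter = 0.0
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]  # wrap around to close the outline
        twice_area += x1 * y2 - x2 * y1  # shoelace cross term
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(twice_area) / 2.0, perimeter


# Hypothetical digitized feature: a 100 m by 50 m rectangle.
outline = [(0.0, 0.0), (100.0, 0.0), (100.0, 50.0), (0.0, 50.0)]
area, perimeter = polygon_area_and_perimeter(outline)
print(f"Area = {area} square meters, perimeter = {perimeter} meters")
```

The more vertices placed while digitizing a curved feature, the closer the polygon follows its true outline.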

The fourth task is to calculate relief displacement from the height of an object in an aerial image. Using the smokestack labeled A in Figure 3, I was able to determine the relief displacement at that location. The taller the object, the greater its relief displacement. The following equation gives how far the top of the object needs to be moved toward the principal point of the image.

a. D = (h*r)/H

b. D = relief displacement, h = height of object, r = radial distance of the top of the displaced object from the principal point, H = height of camera above local datum

c. h = 0.4 * 3,209 = 1,283.6 in, r = 10.2 in, H = 3,980 ft = 47,760 in

d. D = (1,283.6 * 10.2)/47,760

e. D = 0.274 in

f. Move 0.274 in toward the principal point.
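The relief displacement calculation can be reproduced with a few lines of code. This is a minimal sketch using the values from the steps above; the function name `relief_displacement` is chosen for illustration, and all three inputs are assumed to be in inches.

```python
INCHES_PER_FOOT = 12


def relief_displacement(object_height, radial_distance, flying_height):
    """Return D = (h * r) / H.

    object_height and flying_height must share the same units;
    the result is in the units of radial_distance.
    """
    return object_height * radial_distance / flying_height


# Values used for smokestack A in Figure 3.
h = 0.4 * 3209            # object height in inches, as computed in step c
r = 10.2                  # radial distance from the principal point, inches
H = 3980 * INCHES_PER_FOOT  # camera height above local datum, inches

d = relief_displacement(h, r, H)
print(f"Relief displacement = {d:.3f} in toward the principal point")
```

Because r appears in the numerator, the same object would show more displacement the farther it sits from the principal point.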

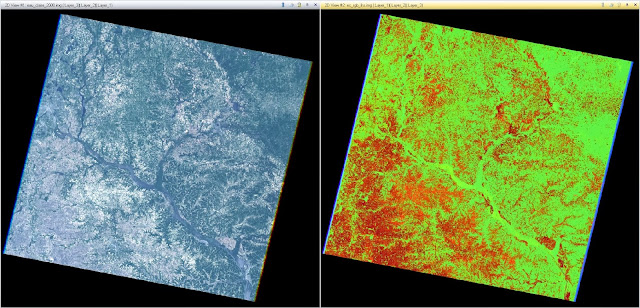

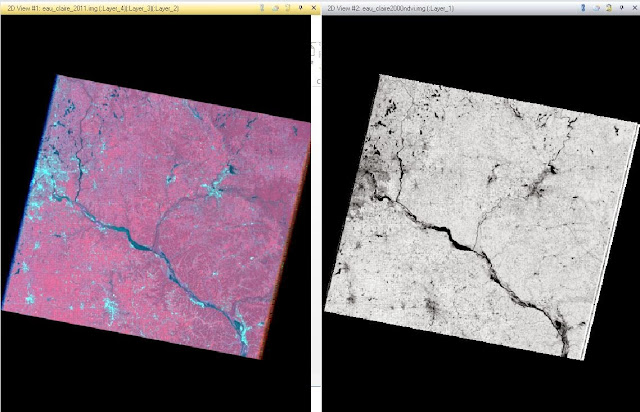

The fifth task was to use two satellite images and GCPs to create a 3-dimensional perspective view of the City of Eau Claire. To get started, we need to select our two images. The first is an image of the City of Eau Claire at 1 meter spatial resolution, and the second is a Digital Elevation Model (DEM) of the City of Eau Claire with a spatial resolution of 10 meters. To combine these images I used the Terrain - Anaglyph tool. The two images of Eau Claire are entered as inputs, the vertical exaggeration is set to 2, and the tool is run. The output image, Figure 4, shows the elevation changes in the City of Eau Claire when viewed with red/cyan anaglyph glasses. This tool is especially helpful if you are not able to travel to the study area but need to see what the terrain looks like with the image draped over it.

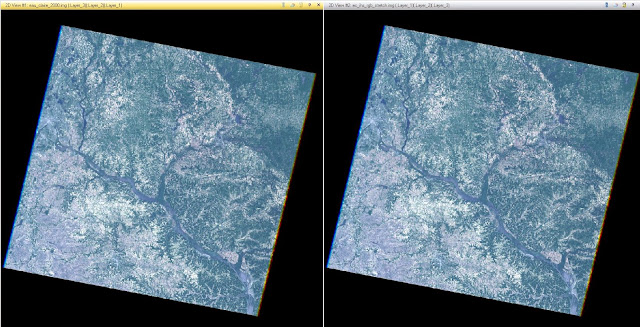

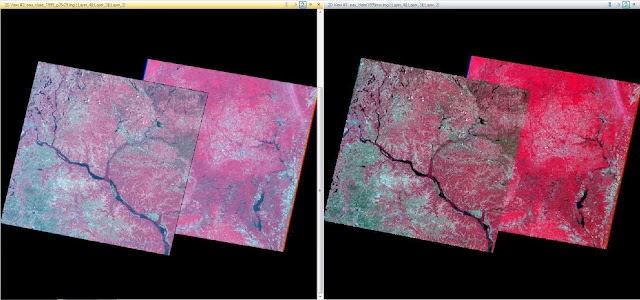

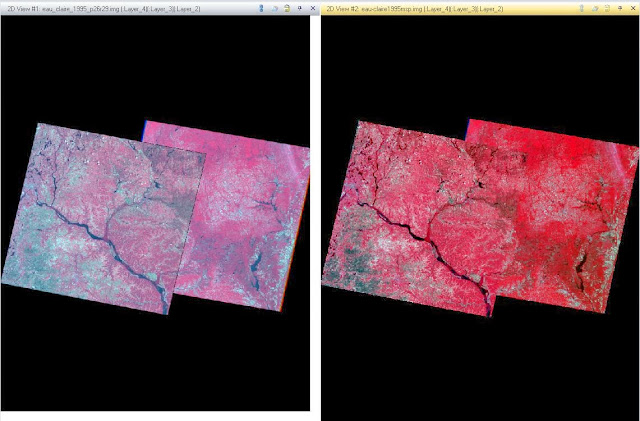

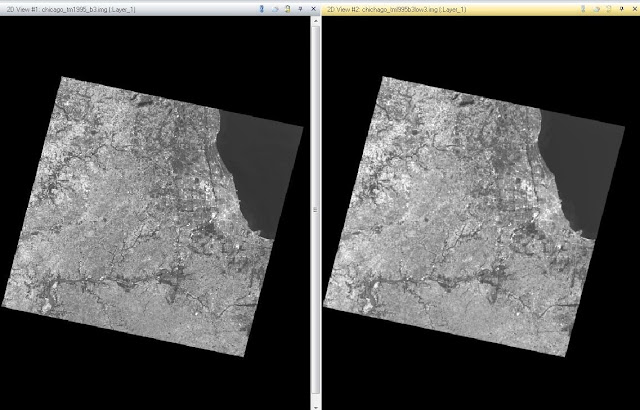

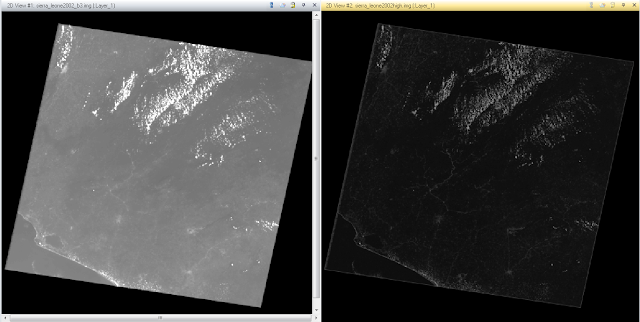

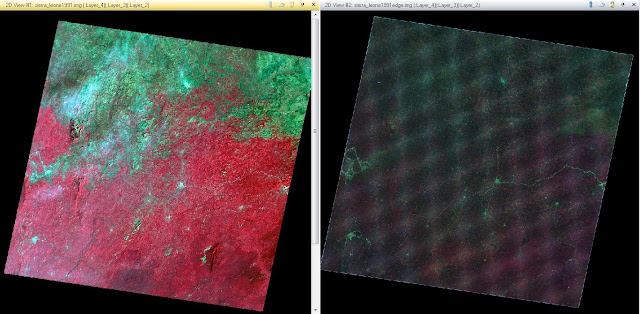

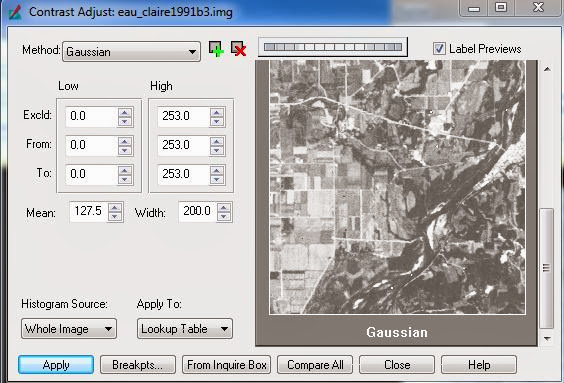

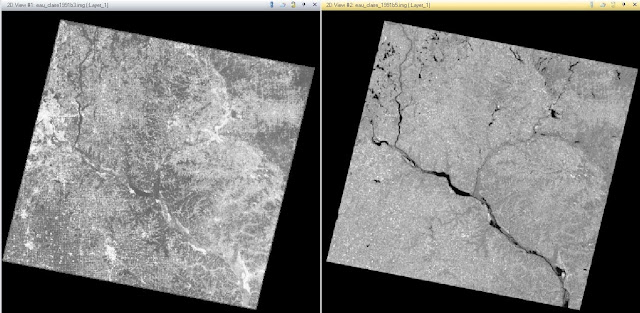

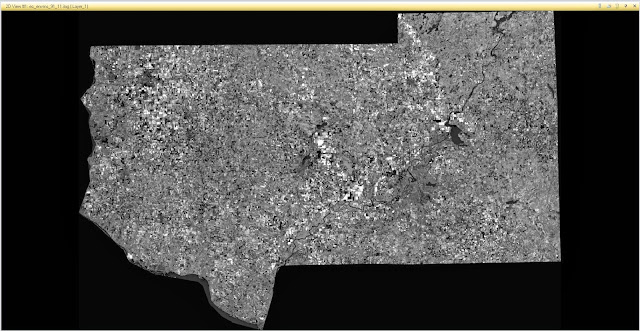

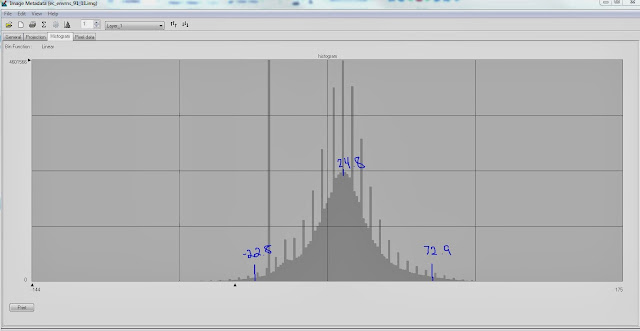

The final challenge of this lab is performing orthorectification on two images using the Erdas Imagine Leica Photogrammetry Suite (LPS). LPS is used in digital photogrammetry for triangulation, for orthorectification of images collected by various sensors, and for extraction of digital surface and elevation models.

To get started, open the LPS Project Manager located under the Toolbox tab, then click Create New Block File to start building a model. For the geometric model, we want the Polynomial-based Pushbroom category and the SPOT Pushbroom model. Now that the block file is set up, we need to choose the projection for the block. The projection type should be UTM, the spheroid name Clarke 1866, the datum name NAD27 (CONUS), and the UTM zone 11, set to North.

With the block set up, it is time to add the images to it and define the sensor model. The first image to add is Spot_pan.img of Palm Springs, California. After adding the image, verify the parameters of the SPOT pushbroom sensor by clicking Show and Edit Frame Properties and then Edit sensor information. Once the sensor has been verified, the Int. icon turns green.

Next, start the point measurement tool by choosing Classic Point Measurement Tool. This tool opens three displays to assist in the collection of GCPs. The xs_ortho image has to be added so that we can reference the GCPs between images. To add it, click the Reset horizontal reference source icon and check Image layer, then navigate to the ortho image and load it. That image serves as the reference when applying the GCPs to the spot_pan image. Now we are ready to collect the GCPs. In the ortho image, place your first point, then find the corresponding location in the SPOT image. Note that points can also be placed automatically by typing in the x,y reference coordinates. The process is repeated until 9 GCPs are collected.

The final two points are collected using another ortho image, NAPP_2m-ortho.img, which has a spatial resolution of 2 meters. These points are added using the same method, but with the NAPP ortho image as the reference instead of the xs ortho image. Now that the reference points have been collected from the two ortho images and the file coordinates from the SPOT image, we can apply the same points to another SPOT image, spot_panb. After bringing the new image into the block and making sure its parameters are correct, we have to connect the two images using the points we collected earlier. Once the points have been applied to the new image, the block display shows the two images overlapping at the GCP locations (Figure 5).

All that is left is automatic tie point collection, triangulation, and resampling. In the automatic tie point generation properties tool, set Image used to All Available, Initial Type to Exterior/header/GCP, and Image layer used for Computation to 1. Once that process runs, apply triangulation. The result is a model that can be used to create orthorectified images in which relief displacement and other geometric errors have been corrected and accuracy has been improved. The images are then resampled using bilinear interpolation, producing the two orthorectified images shown below in Figure 6.
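To give a sense of what the bilinear interpolation resampling step does, here is a minimal sketch that samples a small grid of pixel values at a fractional position. It is a generic illustration under stated assumptions, not the LPS implementation: the function name `bilinear_sample` and the 2 by 2 test grid are hypothetical, and positions outside the grid are simply clamped to its edge.

```python
import math


def bilinear_sample(grid, x, y):
    """Sample grid (a list of rows) at fractional column x and row y.

    The value is a distance-weighted average of the four pixels
    that surround the requested position.
    """
    rows, cols = len(grid), len(grid[0])
    # Clamp the position so the four neighbors always exist.
    x = min(max(x, 0.0), cols - 1)
    y = min(max(y, 0.0), rows - 1)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0  # fractional offsets inside the cell
    top = grid[y0][x0] * (1 - fx) + grid[y0][x1] * fx
    bottom = grid[y1][x0] * (1 - fx) + grid[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


# Hypothetical 2 x 2 block of pixel values.
pixels = [[10.0, 20.0],
          [30.0, 40.0]]
print(bilinear_sample(pixels, 0.5, 0.5))  # center of the four pixels
```

When an image is orthorectified, each output pixel usually maps back to a fractional position in the input image, which is why some interpolation rule of this kind is needed. Bilinear interpolation gives smoother output than nearest neighbor at the cost of slightly altering the original pixel values.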

Results

Figure 1 - Calculating scale using S = Photo distance / Ground distance

Figure 2 - Calculating scale using S = Focal length / (Height of aircraft - Terrain height)

Figure 3 - Calculating relief displacement using D = (h*r)/H

Figure 4 - 3-dimensional perspective view of the City of Eau Claire

Figure 5 - Orthorectified images in the LPS project manager

Figure 6 - Orthorectified images in Erdas Viewer